Hard Failover

Hard failover allows a node to be removed from a cluster reactively, because the node has become unavailable or unstable.

Understanding Hard Failover

Hard failover drops a node from a cluster reactively, because the node has become unavailable or unstable. It is manually or automatically initiated, and occurs after the point at which active vBuckets have been lost.

The automatic initiation of hard failover is known as automatic failover, and is configured by means of the Node Availability screen, in the Settings area of Couchbase Web Console, or by means of equivalent CLI and REST API commands. This page explains how to initiate hard failover manually.

A complete conceptual description of failover and its variants (including hard) is provided in Failover.

Examples on This Page

The examples in the subsections below show fail the same node over gracefully, from the same two-node cluster; using the UI, the CLI, and the REST API respectively. The examples assume:

-

A two-node cluster already exists; as at the conclusion of Join a Cluster and Rebalance.

-

The cluster has the Full Administrator username of

Administrator, and password ofpassword.

Hard Failover with the UI

Proceed as follows:

-

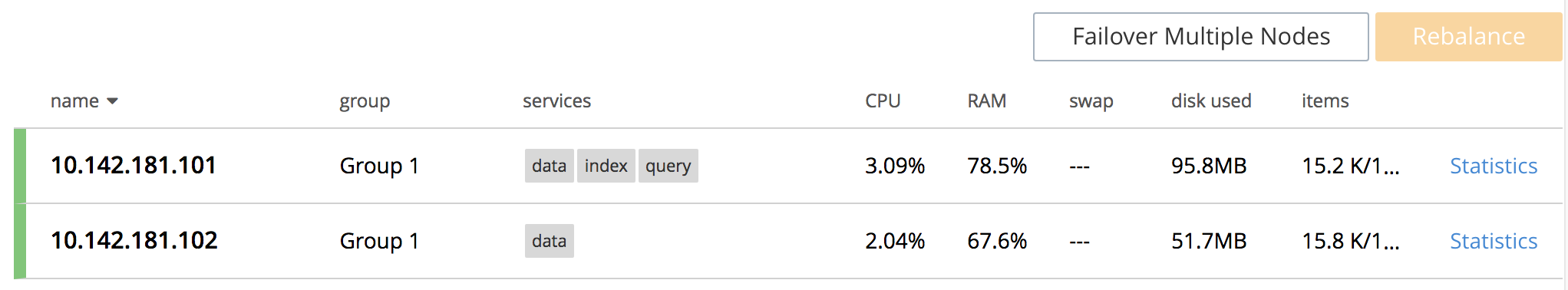

Access the Couchbase Web Console Servers screen, on node

10.142.181.101, by left-clicking on the Servers tab in the left-hand navigation bar. The display is as follows:

-

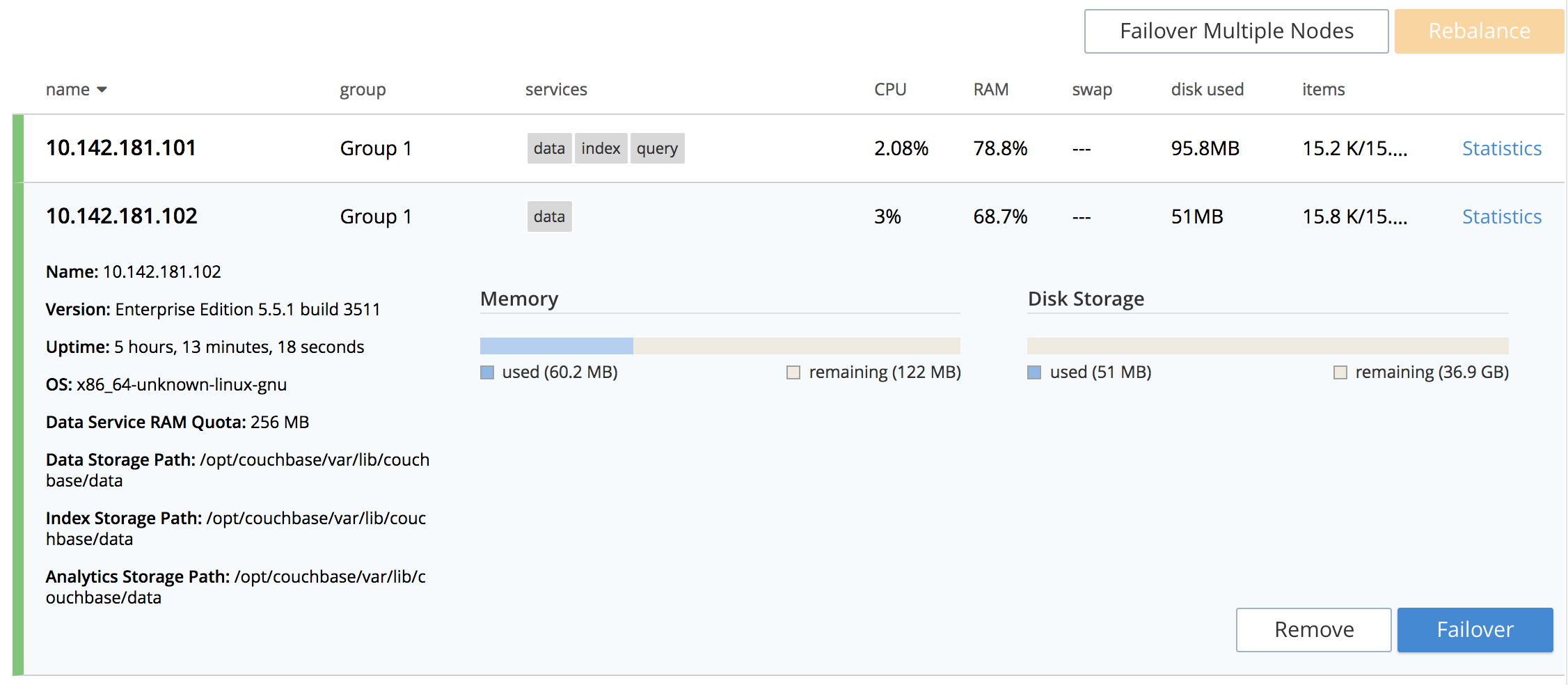

To see further details of each node, left-click on the row for the node. The row expands vertically, as follows:

-

To initiate failover, left-click on the Failover button, at the lower right of the row for

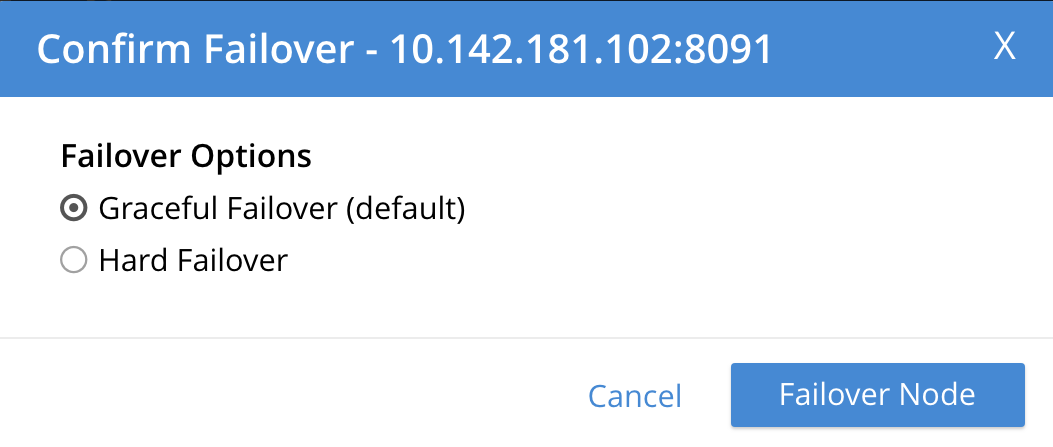

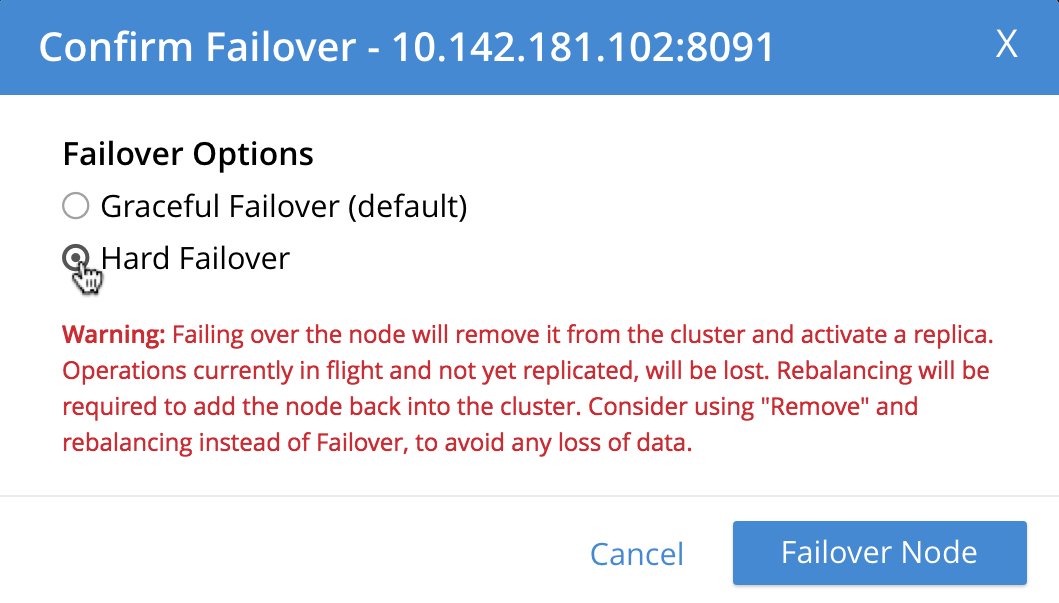

101.142.181.102:The Confirm Failover Dialog now appears:

Two radio buttons are provided, to allow selection of either Graceful or Hard failover. Graceful is selected by default.

-

Select hard failover by selecting the Hard radio button:

Note the warning message that appears when hard failover is selected: in particular, this points out that hard failover may interrupt ongoing writes and replications, and that therefore it may be better to Remove a Node and Rebalance, than use hard failover on a still-available Data Service node.

To continue with hard failover, confirm your choice by left-clicking on the Failover Node button.

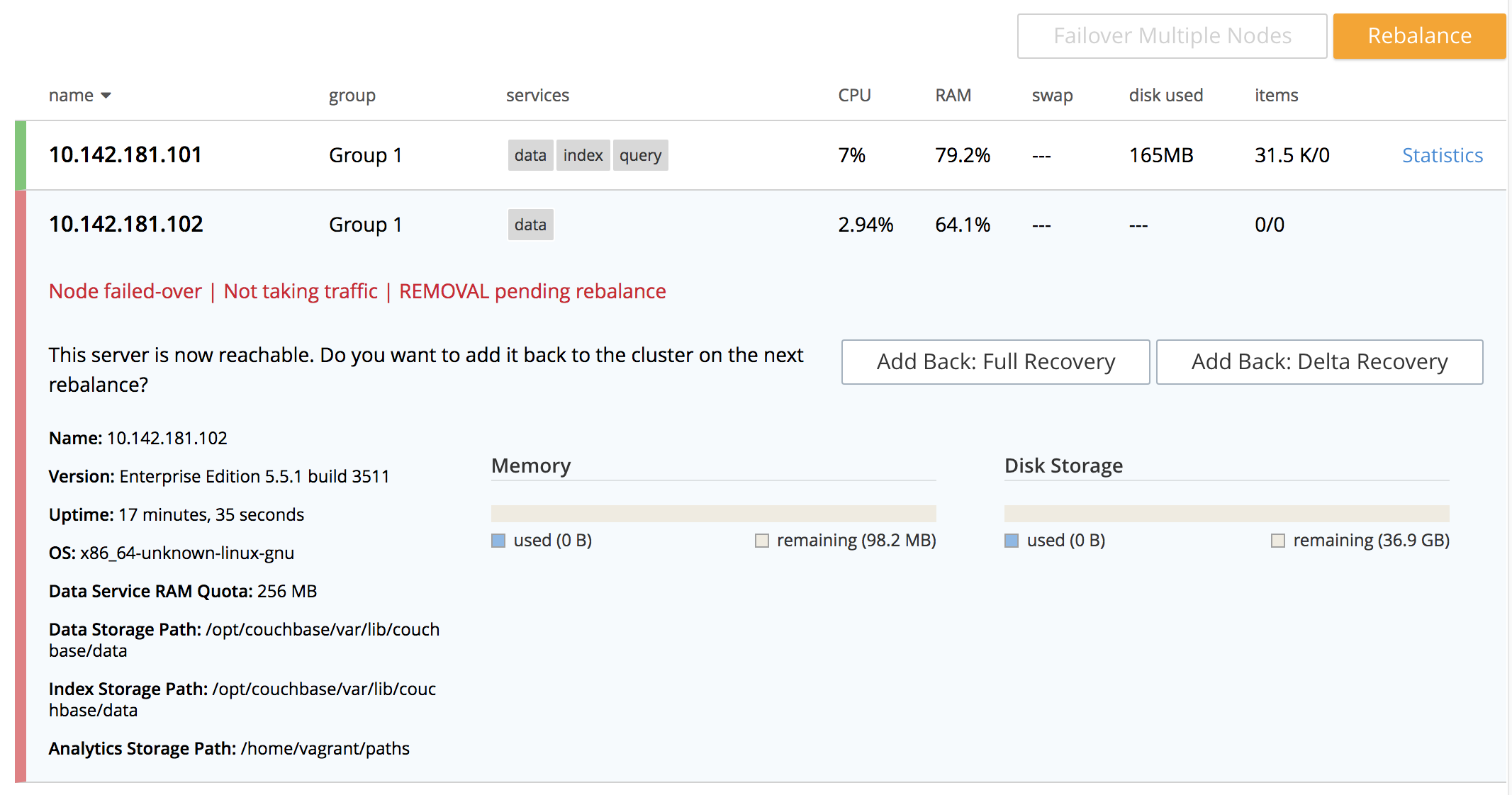

Hard failover now occurs. On conclusion, the Servers screen appears as follows:

This indicates that hard failover has successfully completed, but a rebalance is required to complete the reduction of the cluster to one node.

-

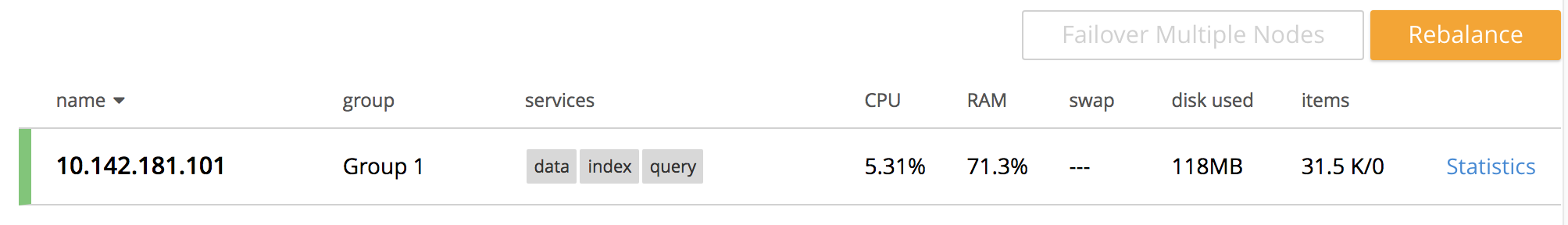

Left-click the Rebalance button, at the upper right, to initiate rebalance. When the process is complete, the Server screen appears as follows:

Node

10.142.181.102has successfully been removed.

Hard Failover with the CLI

To perform hard failover on a node, use the failover command with the --force flag, as follows:

couchbase-cli failover -c 10.142.181.102:8091 \ --username Administrator \ --password password \ --server-failover 10.142.181.102:8091 --force

The --force flag specifies that failover be hard.

When the progress completes successfully, the following output is displayed:

SUCCESS: Server failed over

The cluster can now be rebalanced with the following command, to remove the failed over node:

couchbase-cli rebalance -c 10.142.181.101:8091 \ --username Administrator \ --password password --server-remove 10.142.181.102:8091

Progress is displayed as console output. If successful, the operation gives the following output:

SUCCESS: Rebalance complete

For more information on failover, see

cli:cbcli/couchbase-cli-failover.adoc. For

more information on rebalance, see

cli:cbcli/couchbase-cli-rebalance.adoc.

Hard Failover with the REST API

To perform hard failover on a node over gracefully with the REST API, use the /controller/failover URI, specifying the node to be failed over, as follows:

curl -v -X POST -u Administrator:password \ http://10.142.181.101:8091/controller/failOver \ -d 'otpNode=ns_1@10.142.181.102'

Subsequently, the cluster can be rebalanced, and the failed over node removed, with the /controller/rebalance URI:

curl -u Administrator:password -v -X POST \ http://10.142.181.101:8091/controller/rebalance \ -d 'ejectedNodes=ns_1%4010.142.181.102' \ -d 'knownNodes=ns_1%4010.142.181.101%2Cns_1%4010.142.181.102'

For more information on /controller/failover, see Failing Over Nodes.

For more information on /controller/rebalance, see Rebalancing Nodes.

Next Steps

A node that has been failed over can be recovered and reintegrated into the cluster. See Recover a Node.